Important Note: The following information is subject to updates.

AIMC 2025 features an outstanding panel of keynote speakers, offering insights into AI-driven creativity, human-AI collaboration, and the future of generative music.

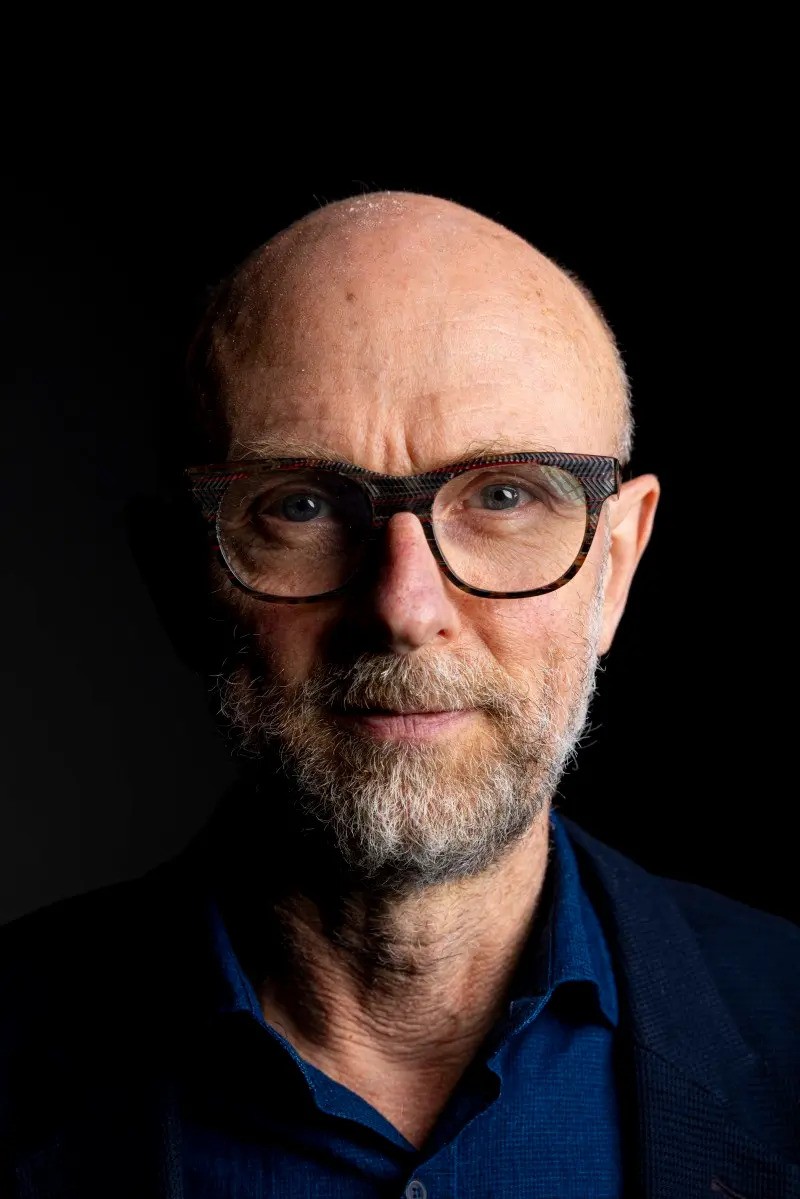

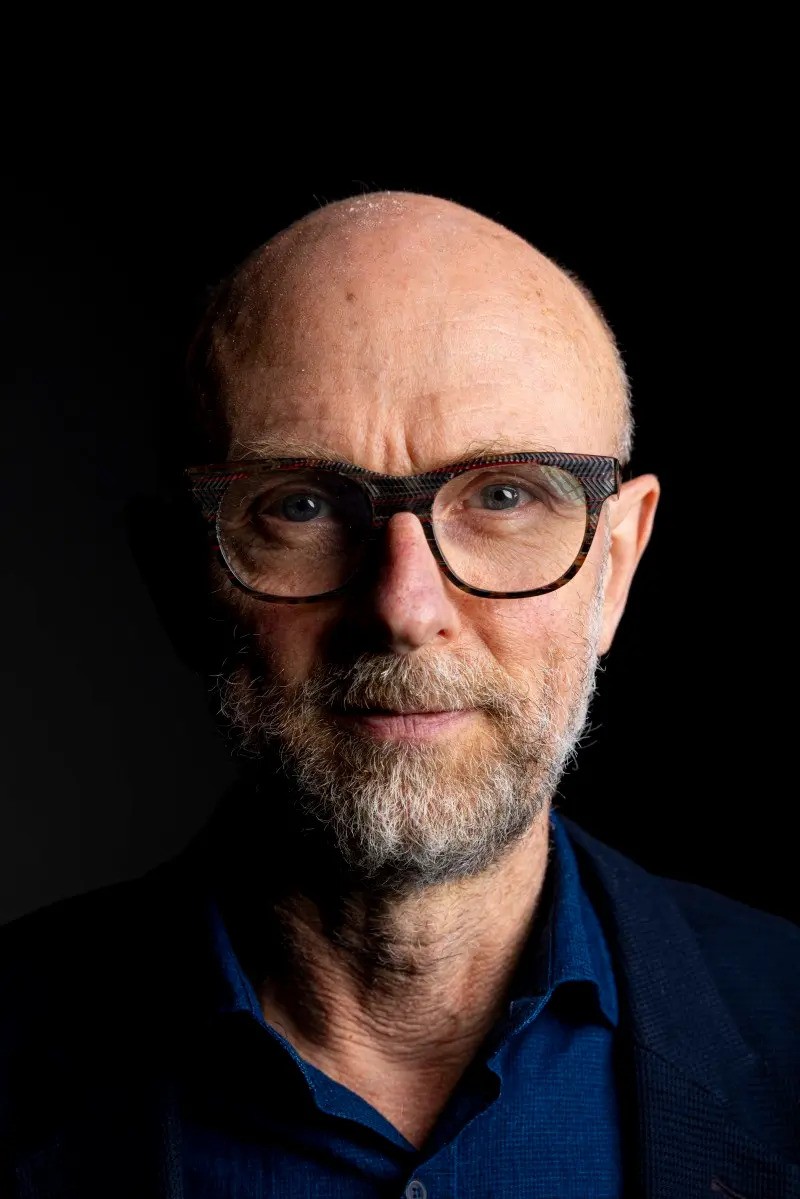

Marc Leman

Bio

Marc Leman is a professor of musicology at Ghent University, specializing in the theory and methodology of human interaction with music: what people do with music and, more importantly, what music does to people. Considered by some a pioneer in computational and embodied musicology, Leman now focuses on the statistical analysis of high-dimensional music data. He founded the Art and Science Lab at De Krook, a top European lab for studying human interaction in virtual reality with high-performance 3D audio and motion capture facilities. He has published over 450 articles and books (including at MIT Press). In 2015, he received the Ernest-John Solvay Prize for Language, Culture, and Social Sciences from FWO and held the Francqui Chair at the Université de Mons in 2014. He was also a laureate of the Methusalem programme (KU Leuven) from 2007 to 2023.

Music Playing as a Paradigm for Intelligent Interaction: A Challenge for AI

Abstract

In this talk, I shall argue that music playing offers an intriguing paradigm and playground for developing intelligent interaction systems. Music playing requires a specific form of intelligence based on sensorimotor controls embedded in prediction schemes.

Understanding music playing provides a foundation for developing machine assistants, partners, and collaborators as interactive agents, exoskeletons, or stand-alone robots. Such systems may be beneficial beyond music, in domains that require human training, re-learning, body extensions, and joint human-machine actions.

Recent studies have revealed that human interactions can be manipulated at a subliminal level using biofeedback. This biofeedback can use auditory or musical modifications of expected perception, as well as haptic control of the human motor system. In both ways, biofeedback may cause a change in the response and, consequently, in the interaction, without awareness of the manipulation. Embedded in reinforcement learning, biofeedback can be quite effective.

Examples are used to illustrate the concepts of intelligent interaction and biofeedback.

First, I focus on rhythmic entrainment, or the adaptation to external (musical) rhythms close to one's own range of rhythmic movement. In humans, this natural rhythmic entrainment mechanism has been found when two or more people interact. Analysis reveals that entrainment obeys a controller that allows the perceived rhythms of the partner to influence one's own rhythms. In music playing, rhythmic entrainment is a key feature of interaction intelligence, and crucial in co-regulated ensemble playing. I will argue that flexible dynamic systems allowing for rhythmic synchronization and rhythmic entrainment are key ingredients of intelligent interaction systems.

Next, I give an example of joint action in music playing. In this example, the focus is on haptic control of the bowing arms of two violinists playing together, using exoskeletons attached to their arms. A bi-directional coupling of the exoskeletons reveals a notable improvement in the synchrony of the violinists' playing actions, compared to no coupling.

Recent efforts aim at the development of brain-based biofeedback systems, using multimedia devices (including EEG). With AI, it is possible to advance several stages of this development, such as generating music for biofeedback applications, optimizing the control of real-time rhythmic/motoric entrainment, and optimizing overall learning and reinforcement learning schemes in view of goal achievement. Moreover, brain-based biofeedback typically requires sophisticated feature extraction and data analysis for capturing brain components relevant for biofeedback.

I show that creative and innovative AI-music systems can get their inspiration from artistic activities because these activities offer a rich and motivating domain for studying biofeedback. Databases of music playing that involve perfectly synchronized motion capture, EEG, and body sensors are rare, but they may play a key role in the future development of intelligent interaction systems.

The last part of the talk is formulated as a challenge for the AI community. Starting from a theory of human music interaction, I argue that intelligent interaction behavior in machines may require solutions for behavior that depends on the neurobiology of pleasure-reward mechanisms, and on the biological constraints that form the basis of biosocial expression and embodiment.

Katerina Kosta

Bio

Katerina Kosta is the Head of AI at Hook, building tools that enrich music creativity while ensuring fair treatment for artists and creators. Before joining Hook, she worked as a senior research scientist at TikTok/ByteDance (Speech Audio Music Intelligence team) and as a machine learning researcher at the London-based startup Jukedeck. Her background and experience include work on AI music generation and understanding and on computational musicology, and she is an author of various patents and research papers. She pursued her PhD studies at the Centre for Digital Music at Queen Mary University of London on modelling dynamic variations in expressive music performance. She also holds degrees in classical piano and maths.

From Consumption to Creation: Legal and Technical Foundations for an AI-Powered Music Platform

Abstract

As digital music consumption increasingly shifts from passive listening to more active forms of creation and expression, there is a growing need for tools that allow anyone to participate meaningfully in the music-making process. Hook Music provides a unique framework where artists, labels, and rights-holders can license their content and retain control over how it is used, while also being able to track engagement and monetize derivative works. At the same time, creators are empowered to remix their favorite songs, blend genres, add effects, and spark new cultural trends. Consumers, in turn, are able to move beyond listening by sharing or interacting with creator-generated content.

The platform's AI-powered tools are ethically trained and designed to support a range of user interactions. We will explore the technical and legal challenges involved in building such a system, particularly the need for reliable attribution and revenue-sharing mechanisms that link original copyrights with modified outputs. We will also examine current approaches to measuring attribution in generative AI for music, and the broader implications for fair compensation and credit within participatory creative ecosystems.